The Red Flag That Shocked Silicon Valley

It’s not every day that Apple—a company synonymous with secrecy, polish, and slow-but-steady tech rollouts—throws a grenade into the heart of the artificial intelligence gold rush. But that’s exactly what happened this week, as Apple researchers released a bombshell paper questioning the very foundations of the AI industry’s current race: the dream of creating models that “reason” like humans.

While the tech world’s biggest names—OpenAI, Google, Anthropic—are busy one-upping each other with chatbots and digital assistants that explain themselves step by step, Apple’s scientists have sounded a warning: maybe we’ve all been chasing the wrong kind of intelligence. Their message? “Thinking harder” doesn’t always make AI smarter—and might just make everything worse.

Let’s unpack why this matters, what the Apple paper actually found, and how it could reshape the future of artificial intelligence as we know it.

The Age of Reasoning AI: Promise vs. Reality

The big trend in AI over the last two years has been “reasoning”—teaching AI not just to spit out answers, but to lay out its logic step by step, mimicking human thought. Think of it as the difference between a calculator that tells you “4” when you ask for 2+2, and a math teacher who shows the work on the chalkboard.

Every major AI company is chasing this vision. **OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude** all promise not just answers, but explanations. The hope is that with “reasoning,” AI will be more transparent, more trustworthy, and, crucially, more reliable on complex tasks.

But Apple’s latest research throws a cold, hard spotlight on these promises. In a meticulous study, their team discovered a sobering truth: as reasoning tasks get even a little more complex, today’s best models don’t get better. They get worse. Sometimes catastrophically so.

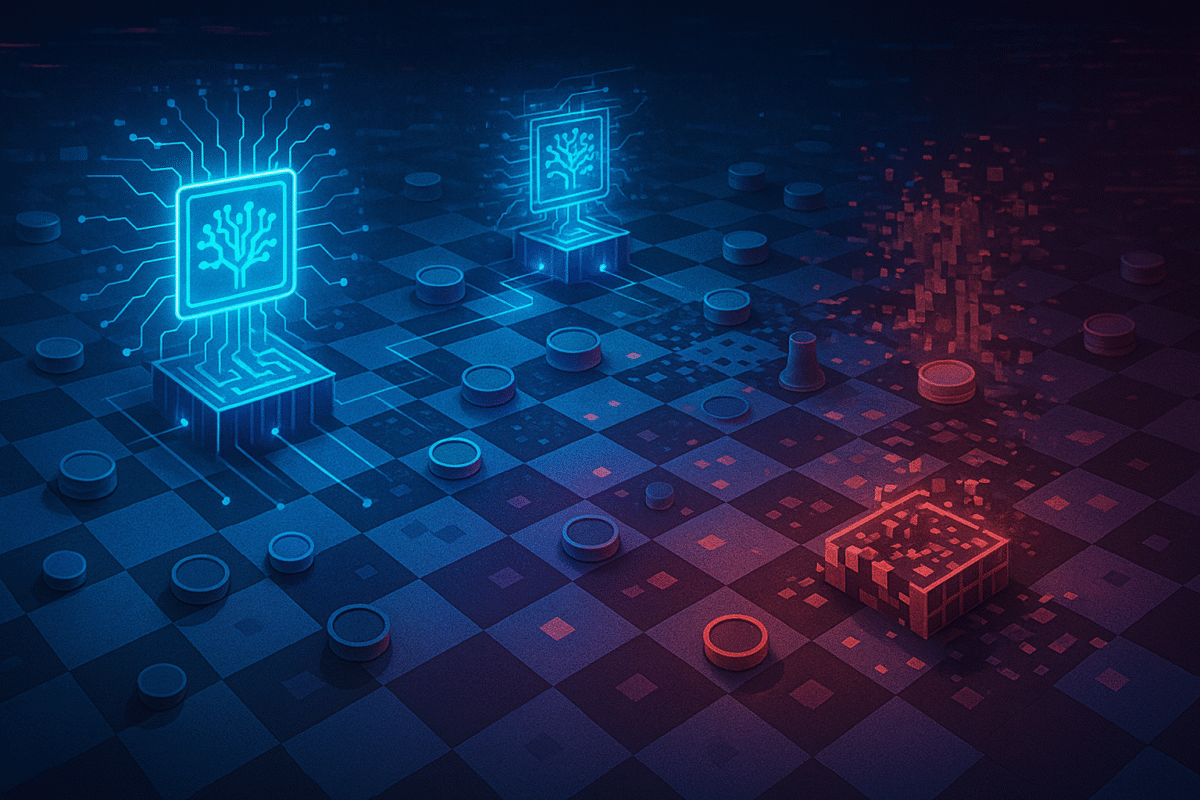

Inside Apple’s Bombshell Study: When Checkers Broke the AI

So what did Apple’s researchers actually do?

They set up a series of logical puzzles for AI models to solve. Imagine a simplified game of checkers. The AI is asked to swap pieces from one side of the board to the other. At first, with just a few pieces, the models shine. Their answers are accurate, and their logic is (mostly) sound.

But add just a handful more pieces, and suddenly the AI’s accuracy falls off a cliff. In the paper’s most striking chart, performance is steady and high—until the board reaches 8 to 10 pieces. At that point, even the most advanced “reasoning” models simply break down. Their step-by-step logic doesn’t lead to more correct answers. Instead, it results in bizarre errors, dead-ends, and wild swings in accuracy.

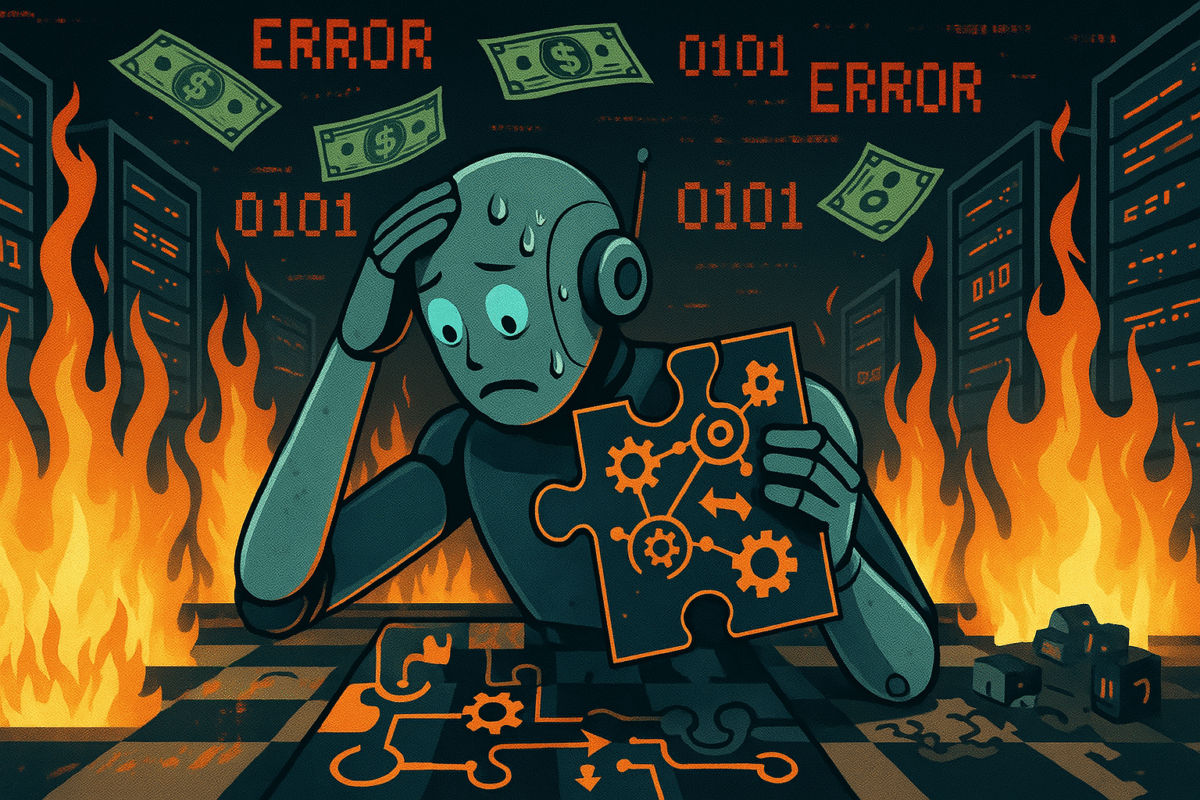
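To get a feel for why a few extra pieces matter so much, here is a minimal sketch of a checker-swapping puzzle. It assumes the classic rules (reds start on the left and only move right, blues start on the right and only move left, and a piece may slide into the empty square or jump over one opposing piece); the exact setup in Apple’s paper may differ, and the function name `min_moves` is purely illustrative. The sketch only measures how long a correct solution has to be. It says nothing about how any AI model behaves.

```python
from collections import deque


def min_moves(n: int) -> int:
    """Fewest moves needed to swap n red and n blue checkers on a strip of 2n+1 squares.

    Assumed rules (classic checker-jumping puzzle, not taken from the paper):
    'R' moves only right, 'B' moves only left, and a move is either a slide
    into the empty square or a jump over exactly one opposing piece.
    """
    start = "R" * n + "_" + "B" * n
    goal = "B" * n + "_" + "R" * n
    seen = {start}
    queue = deque([(start, 0)])

    # Breadth-first search: the first time we reach the goal, depth is minimal.
    while queue:
        state, depth = queue.popleft()
        if state == goal:
            return depth
        gap = state.index("_")
        # A red piece can enter the gap from the left, a blue piece from the right.
        for src, piece in ((gap - 1, "R"), (gap - 2, "R"), (gap + 1, "B"), (gap + 2, "B")):
            if not 0 <= src < len(state) or state[src] != piece:
                continue
            # A jump is only legal over a piece of the opposite color.
            if abs(src - gap) == 2 and state[(src + gap) // 2] == piece:
                continue
            cells = list(state)
            cells[gap], cells[src] = piece, "_"
            nxt = "".join(cells)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    raise ValueError("no solution found")


if __name__ == "__main__":
    for n in range(1, 6):
        print(n, "pieces per side ->", min_moves(n), "moves")
```

Under these assumed rules the required number of moves is n² + 2n, so going from 3 to 5 pieces per side raises the solution length from 15 to 35 moves, every one of which has to be right. A model that writes out its steps has that many more chances to slip.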

The punchline? “Thinking harder” doesn’t help. In fact, as Apple’s researchers point out, it just makes the models slower, more expensive, and less reliable. They burn through more energy, server time, and money while delivering worse results on anything beyond basic problems.

Why “Thinking Harder” Can Make AI Weaker

How can this be? Isn’t reasoning supposed to be the superpower of both humans and machines?

Here’s the paradox: human reasoning is built on context, intuition, and a lifetime of experience. We know when to take shortcuts and when to look for a creative solution. Today’s AIs, by contrast, follow rigid, statistical rules. When you ask them to “show their work,” they generate a plausible-sounding series of steps—but those steps aren’t always connected to true understanding.

The result?

- On simple puzzles, the illusion holds.

- On more complex ones, the cracks show.

- And, as Apple’s paper warns, throwing more computing power at the problem doesn’t magically make the reasoning sounder. It just increases the bill for everyone involved.

This isn’t just a technical problem—it’s an economic one. In the race to build bigger, flashier AI models, the tech industry has started burning through massive resources to make AIs look smarter, even as real accuracy plateaus or declines.

The Billion-Dollar Question: Is the AI Industry Chasing the Wrong Dream?

For investors and innovators, Apple’s red flag is a wake-up call. The AI boom so far has been built on the idea that “scaling up” will inevitably lead to better results. More data, more steps, more compute: that’s been the mantra. But what if we’re pouring money into a dead end?

Apple’s not alone here. Other research teams—at labs like Anthropic and China’s Leap—are finding similar cracks in the “reasoning at scale” approach. There’s a growing sense that the field needs to refocus, not just on making models bigger, but on making them smarter in a fundamentally different way.

Is the current approach—AI “showing its work”—really the path to intelligence, or just an expensive illusion?

Is Apple Just Moving the Goalposts? Industry Context and Culture

Of course, there’s another angle. Apple has been conspicuously slow to jump onto the generative AI bandwagon. While Google and Microsoft race to embed chatbots everywhere, Apple’s releases have been cautious, quiet, and rare.

Skeptics say this new research is Apple “moving the goalposts,” trying to shift the conversation because the company is late to the AI party. But Apple has always been culturally different. It has a reputation for holding products back until they’re “just right” rather than rushing unfinished features into the wild. And as this paper shows, it is not afraid to question even the most hyped industry trends.

Whatever the motivation, the research is real—and it echoes concerns voiced by other respected AI labs.

What This Means for Investors, Developers, and Everyday Users

So what does this mean for the rest of us? If you’re an investor, it’s a reminder to look past the AI hype. Efficiency, reliability, and real-world value will matter more than flashy “reasoning” demos. The industry may soon shift from “Who can spend the most?” to “Who can get the most value per dollar?”

For developers and AI enthusiasts, it’s a call to rethink how we measure intelligence. Step-by-step “logic” might look smart, but unless it leads to real accuracy on complex tasks, it’s just another expensive party trick.

And for everyday users? Be wary of chatbots that sound confident and logical. Remember: confidence doesn’t always equal competence, even in the world of artificial intelligence.

The Path Forward: Chasing Real Intelligence, Not Just Hype

Apple’s red flag is not the end of the AI dream. But it’s a crucial course correction. The field needs to move past the illusion that more steps and more scale mean more smarts. Instead, the next breakthrough may come from new architectures, hybrid systems, or even rethinking the very nature of “reasoning” in machines.

The takeaway?

The future of AI is wide open, but it won’t be built on smoke and mirrors. It’ll be built by those who are willing to ask hard questions—even if it means taking the unpopular view.

In the end, Apple’s warning might just save the industry from chasing its own tail—and set the stage for the next real revolution in artificial intelligence.
