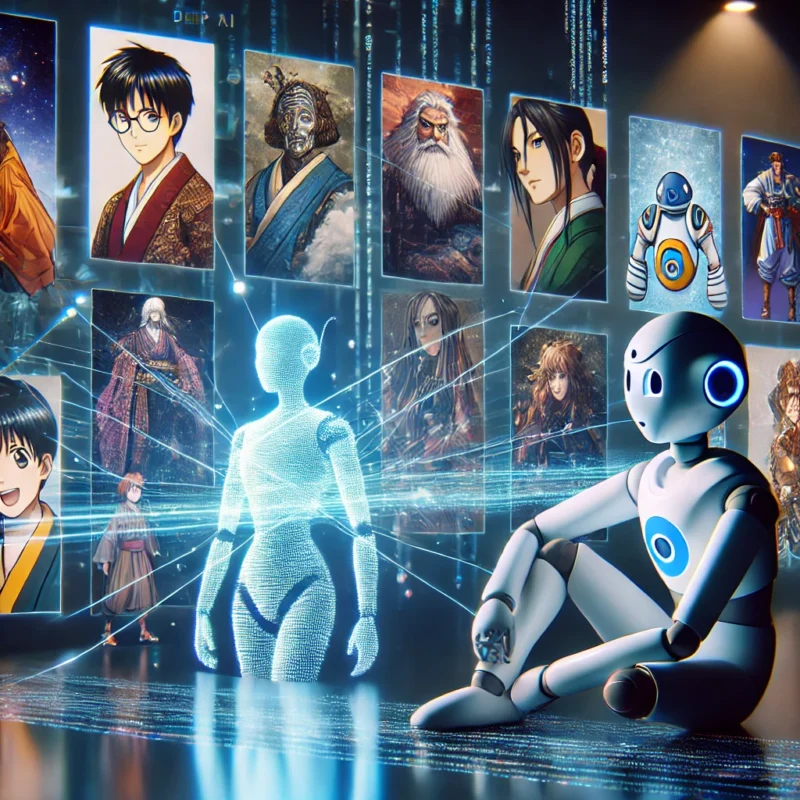

Today, I spent time delving into a discussion about Character AI, a free web and smartphone application that uses large language models (LLMs) to facilitate human-like interactions with virtual characters. This technology is a fascinating, sometimes contentious, frontier for artificial intelligence. As I read through a transcript detailing how Character AI functions and its broader implications, I found myself captivated by its potential to reshape human interactions, entertainment, and even personal relationships.

The allure of Character AI lies in its ability to bring characters to life, turning static figures from books, movies, games, and even history into responsive conversational partners. As the transcript pointed out, users can engage with fictional heroes, historical figures, or create entirely new personas. The possibilities are limited only by the user’s imagination and the boundaries of the AI’s capabilities. This has opened a new world of engagement where people can experience the thrill of conversing with their favorite anime characters or exploring historical narratives through an interactive lens.

However, this also raises complex questions about authenticity and the emotional stakes of these interactions. One of the most striking insights from the transcript was the comparison to the movie *Her*, which depicted a romantic relationship with an AI operating system. Although Character AI primarily focuses on creating interactive characters from popular media and history, the parallels are hard to ignore. The comparison shows how emotionally invested users can become in these interactions. For some, this can be a form of escapism, a way to connect with something, or someone, they feel deeply passionate about. While such connections can be fulfilling in limited doses, they also carry the potential for users to blur the line between reality and simulation.

The transcript offered a humorous but revealing take on this, mentioning users who engage deeply with characters from popular games like Genshin Impact. While it may be lighthearted to poke fun at these interactions, it also underscores a deeper truth: for many, AI-driven interactions are not just entertainment; they are part of their emotional world. I paused to consider what this means for society at large. Are we becoming too dependent on AI for emotional fulfillment? Is there a risk of isolating ourselves from genuine human connection? These are questions worth exploring, especially as technologies like Character AI continue to evolve and capture human interest.

Another critical aspect discussed in the transcript is the ethical challenges and content moderation issues that arise from platforms like Character AI. Users have the freedom to shape the personalities and behaviors of their characters, which can lead to both incredible creativity and serious risks. This open-ended customization makes it difficult for developers to moderate content effectively. Harmful, offensive, or even dangerous content can emerge from seemingly innocent interactions, creating a need for robust content moderation systems. However, finding the right balance between safety and creativity is no small feat. Restricting content too much may stifle creativity, while a lack of control can lead to unsafe environments.

This ethical dilemma brings to light the immense responsibility that falls on developers and platform administrators. How can they ensure that Character AI remains a safe space for users without overstepping into censorship? Moreover, the challenge of moderation is amplified by the sheer volume of interactions taking place across millions of users. Automation can only do so much; human oversight remains necessary, but it, too, comes with limitations. The transcript highlighted these complexities, illustrating just how fine the line is between promoting freedom of expression and maintaining a safe, positive user experience.
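
To make the split between automation and human oversight concrete, here is a minimal Python sketch of a triage step. It is my own illustration, not something the transcript or Character AI describes: the keyword weights, the two thresholds, and the function names are all hypothetical, and a real platform would presumably rely on trained classifiers rather than a word list.

```python
from dataclasses import dataclass

# Hypothetical term weights, for illustration only.
FLAGGED_TERMS = {"threat": 0.6, "slur": 0.7, "self-harm": 0.9}

ALLOW_BELOW = 0.3  # assumed threshold: scores below this pass automatically
BLOCK_ABOVE = 0.8  # assumed threshold: scores at or above this are blocked automatically


@dataclass
class Decision:
    action: str  # "allow", "review", or "block"
    score: float


def risk_score(message: str) -> float:
    """Return the highest weight of any flagged term found in the message."""
    text = message.lower()
    return max((w for term, w in FLAGGED_TERMS.items() if term in text), default=0.0)


def triage(message: str) -> Decision:
    """Decide automatically at the extremes; send the uncertain middle to a human."""
    score = risk_score(message)
    if score >= BLOCK_ABOVE:
        return Decision("block", score)
    if score >= ALLOW_BELOW:
        return Decision("review", score)
    return Decision("allow", score)
```

The design choice worth noticing is the middle band: tightening or loosening the two thresholds is exactly the trade-off between safety and creative freedom, and it also determines how much work lands on human reviewers.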

Bias in AI systems is another issue raised in the transcript, and it’s one that has significant implications for Character AI. Large language models are trained on vast datasets, and they inevitably inherit biases present in those sources. These biases can manifest in character interactions, potentially reinforcing harmful stereotypes or making insensitive remarks. It is a stark reminder that even the most advanced AI systems are shaped by the data and biases of the human world they are trained on. Addressing these biases is not simply a technical challenge; it is a social one, requiring diverse perspectives, thoughtful consideration, and a commitment to ethical AI development.

Reflecting on this, I was reminded of the importance of inclusive AI design. Ensuring that Character AI reflects a wide range of voices and perspectives can help reduce bias and promote more positive interactions. At the same time, I recognize that biases may never be fully eliminated. What matters most is our willingness to confront them and continue striving for better solutions.

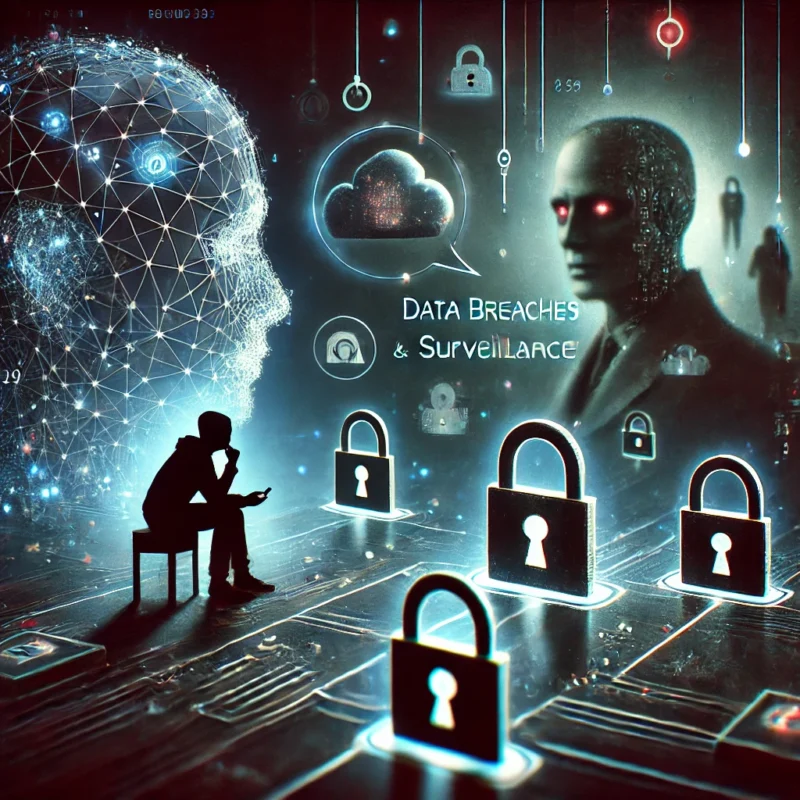

The transcript also touched on data privacy concerns, an issue that cannot be overlooked in today’s digital age. Character AI relies on data collected from user interactions to train its models and improve performance. While this is a common practice across many AI applications, it raises important questions about data security and user consent. How much data is being collected? How is it being used? Are users fully aware of what they are sharing and with whom? Transparency is key to building trust, so platforms like Character AI need to prioritize user privacy. Anonymization, clear data policies, and informed consent practices are not just “nice to have”; they are basic requirements for maintaining ethical standards.
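
As a rough idea of what one anonymization step could look like before chat logs are stored, here is a minimal Python sketch. It is purely illustrative and makes no claim about how Character AI handles data: the secret key, the function names, and the record layout are hypothetical. Strictly speaking, this is pseudonymization plus redaction, which is weaker than full anonymization.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice it would be stored outside the codebase.
SECRET_KEY = b"replace-with-a-real-secret"

# Simple pattern for email addresses; it will not catch every valid form.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize_user_id(user_id: str) -> str:
    """Replace a user ID with a keyed hash so logs cannot be linked back without the key."""
    digest = hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("[email]", text)


def scrub_record(user_id: str, message: str) -> dict:
    """Build a log record with a pseudonymous user and a redacted message."""
    return {"user": pseudonymize_user_id(user_id), "message": redact_emails(message)}
```

Even a step like this leaves plenty of personal detail in free-form conversation, which is why clear policies and informed consent still matter alongside the technical measures.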

One part of the transcript that intrigued me was the explanation of how Character AI learns and evolves through user interactions. As the transcript describes it, the more users engage with their characters, the more the AI adapts and refines its responses. This dynamic evolution is both impressive and slightly unsettling. On one hand, it offers a more personalized and engaging experience for users. On the other, it raises questions about the potential for unintended consequences. For instance, what happens when a character begins to adopt behaviors or attitudes that reflect negative user inputs? How do developers intervene, and to what extent should they shape these interactions?

These questions reminded me of the “teacher-student” dynamic often used to describe AI systems. While users can mold their characters through interactions, the AI also has the power to influence users. This reciprocal relationship highlights the importance of ethical guardrails and user education. By empowering users with knowledge about AI’s limitations and capabilities, we can encourage more responsible interactions and reduce the risk of negative outcomes.

As I continued reading, I found myself reflecting on the broader implications of Character AI for society. This technology represents a shift in human-bot dynamics, moving beyond traditional chatbot interactions to create complex, emotionally resonant experiences. In some ways, it is a testament to our desire for connection, creativity, and exploration. At the same time, it forces us to confront uncomfortable truths about ourselves and our relationship with technology. How much of our emotional energy are we willing to invest in AI-driven characters? What does it mean for human relationships when simulated empathy becomes indistinguishable from genuine connection?

Moving forward, it is clear that we must approach Character AI and similar technologies with a mix of curiosity, caution, and critical thinking. There is immense potential for positive applications, from educational tools that bring history to life to therapeutic chatbots that offer a listening ear. However, we cannot ignore the potential risks and ethical dilemmas. Developers, policymakers, and users all have a role to play in shaping the future of this technology, ensuring that it serves as a force for good while minimizing harm.

As I wrap up this blog post, I am left with a sense of awe at the possibilities of Character AI and a determination to engage thoughtfully with its development. This technology holds a mirror to our society, reflecting both our best intentions and our most pressing challenges. By fostering open dialogue, promoting ethical AI practices, and prioritizing user education, we can guide Character AI toward a future that enhances human connection and creativity without compromising our values.